I used a simple deep network architecture, trained entirely on simulated data, to infer the 2D image coordinates of an object's projected 3D bounding-box corners, followed by perspective-n-point (PnP) to recover the object's 6-DoF pose relative to the camera. The system is called DOPE (Deep Object Pose Estimation).
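The network's target can be made concrete with a small sketch. For each object, a DOPE-style network locates nine 2D keypoints: the eight projected corners of the object's 3D bounding cuboid plus its projected centroid. The code below computes those points with a plain pinhole camera model. The corner ordering, the camera intrinsics, and the helper names are illustrative assumptions, not the ordering NDDS or DOPE use.

```python
from itertools import product


def cuboid_points(width, height, depth):
    """Eight corners plus the centroid of a box centred at the object origin.
    The corner order is an arbitrary choice made for this sketch."""
    hw, hh, hd = width / 2, height / 2, depth / 2
    corners = [(sx * hw, sy * hh, sz * hd) for sx, sy, sz in product((-1, 1), repeat=3)]
    return corners + [(0.0, 0.0, 0.0)]


def transform(R, t, p):
    """Rigid transform of point p: R is a 3x3 nested list, t a 3-vector."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(K, p_cam):
    """Pinhole projection of a camera-frame point; K = (fx, fy, cx, cy)."""
    fx, fy, cx, cy = K
    x, y, z = p_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def projected_cuboid(dims, R, t, K):
    """The nine (u, v) keypoints for an object of size dims at pose (R, t)."""
    return [project(K, transform(R, t, p)) for p in cuboid_points(*dims)]


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    K = (600.0, 600.0, 320.0, 240.0)  # hypothetical 640x480 camera
    # A 10 cm cube, 1 m in front of the camera.
    for u, v in projected_cuboid((0.1, 0.1, 0.1), identity, (0.0, 0.0, 1.0), K):
        print(f"{u:.1f}, {v:.1f}")
```

At inference time the direction is reversed. The nine predicted 2D points, the matching 3D cuboid points, and the camera intrinsics go into a PnP solver such as OpenCV's `cv2.solvePnP`, which returns the rotation and translation.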

I followed these steps:

1. Created 3D CAD models in SolidWorks (.stl/.ply format).

2. Imported them into Blender to assign color, material, and unit scale to each object, then exported them as .fbx files.

3. Imported the .fbx files into Unreal Engine (v4.22).

4. Created the dataset by capturing it with the NDDS plugin.

5. Trained a deep CNN on the custom dataset.

6. Saved the trained weights for later system integration.

Creation of Custom Objects Using Blender:

Make a 3D model in SolidWorks or another CAD tool, import it into Blender (.stl .dae ...), export it as an .fbx file, and import that .fbx file into UE4, which supports the FBX format.

For more, download [Blender](https://www.blender.org/download/).
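The import, material, scale, and export steps can also be scripted instead of done by hand. Below is a minimal sketch under several assumptions: Blender 2.8x-3.x operator names (Blender 4.x renamed the STL/PLY importers), a mesh saved in millimetres, and one flat-colour material. The script name `cad_to_fbx.py` and all helper names are hypothetical.

```python
"""Convert a CAD mesh (.stl or .ply) into a coloured .fbx for Unreal Engine 4.

Sketch only: assumes Blender 2.8x-3.x operator names and a mesh in millimetres.
Run inside Blender:
    blender --background --python cad_to_fbx.py -- part.stl part.fbx
"""
import os
import sys

# Metres per CAD unit; Blender works in metres by default.
METRES_PER_UNIT = {"mm": 0.001, "cm": 0.01, "m": 1.0, "in": 0.0254}


def import_scale(cad_unit):
    """Uniform scale that turns CAD units into Blender metres."""
    return METRES_PER_UNIT[cad_unit]


def parse_args(argv):
    """Blender ignores arguments after '--', so the script reads them from there."""
    args = argv[argv.index("--") + 1:] if "--" in argv else []
    if len(args) != 2:
        raise SystemExit("usage: blender --background --python cad_to_fbx.py -- IN OUT.fbx")
    return args[0], args[1]


def convert(src, dst, cad_unit="mm", rgba=(0.8, 0.1, 0.1, 1.0)):
    import bpy  # only importable inside Blender

    # Start from an empty scene (drops the default cube, camera and light).
    for obj in list(bpy.data.objects):
        bpy.data.objects.remove(obj, do_unlink=True)

    ext = os.path.splitext(src)[1].lower()
    if ext == ".stl":
        bpy.ops.import_mesh.stl(filepath=src)
    elif ext == ".ply":
        bpy.ops.import_mesh.ply(filepath=src)
    else:
        raise ValueError(f"unsupported mesh format: {ext}")

    # One flat-colour material through the default Principled BSDF node (colour is arbitrary).
    mat = bpy.data.materials.new(name="CADMaterial")
    mat.use_nodes = True
    bsdf = mat.node_tree.nodes.get("Principled BSDF")
    if bsdf is not None:
        bsdf.inputs["Base Color"].default_value = rgba

    objects = list(bpy.context.selected_objects)  # the importer selects what it loaded
    if not objects:
        raise RuntimeError("nothing was imported")
    s = import_scale(cad_unit)
    for obj in objects:
        obj.scale = (s, s, s)
        obj.data.materials.append(mat)
    bpy.context.view_layer.objects.active = objects[0]
    # Bake the scale into the mesh so the exported object has scale 1.0.
    bpy.ops.object.transform_apply(location=False, rotation=False, scale=True)

    bpy.ops.export_scene.fbx(filepath=dst, use_selection=True, apply_unit_scale=True)


if __name__ == "__main__" and "--" in sys.argv:
    convert(*parse_args(sys.argv))
```

Scaling at import and then applying the scale keeps the real size in the mesh itself. It is still worth checking the imported size in UE4 (1 Unreal unit is 1 cm) against a known dimension of the part.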

NDDS Setup for Creating the Dataset and Training:

STEP 1 - Download NDDS (NVIDIA Deep learning Dataset Synthesizer) and its documentation from https://github.com/NVIDIA/Dataset_Synthesizer.

STEP 2 - Install and run NDDS: open Dataset_Synthesizer/Source/NDDS.uproject in the Unreal Editor. A default level called TestCapturer loads, as indicated in the top-left corner of the 3D viewport. This level contains a sample scene with a basic simulation capture already set up.

STEP 3 - Train the custom model: training code is provided in train.py.

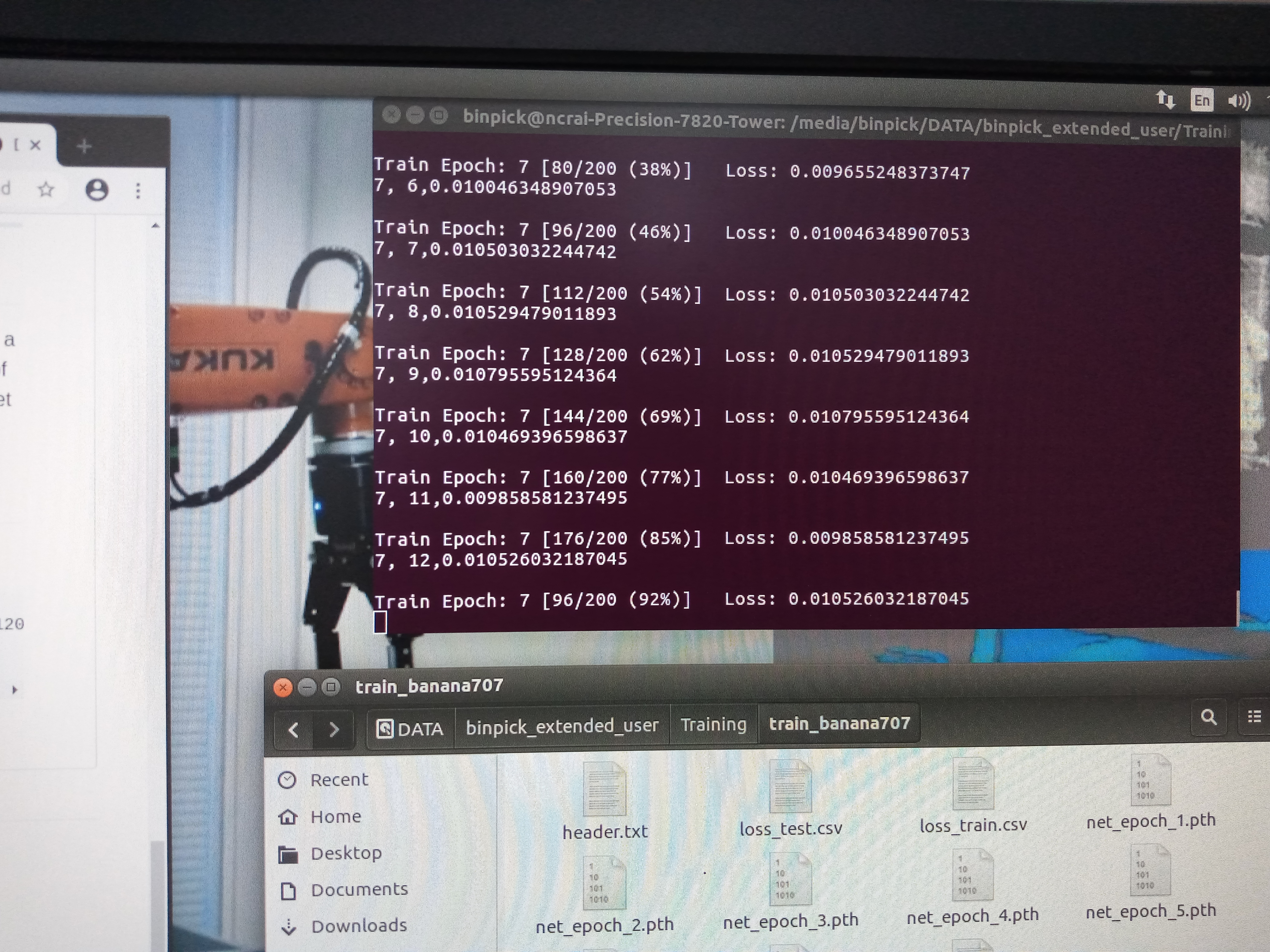
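DOPE's network does not regress keypoint coordinates directly. It outputs belief maps, one heatmap per keypoint (eight cuboid corners plus the centroid), each peaking at that keypoint's location. DOPE also predicts vector fields from the corners toward the centroid, which this sketch omits. The code below renders one ground-truth belief map as a 2D Gaussian and reads the peak back. The map size, `sigma`, and function names are assumptions, and the actual resolution and spread used by train.py may differ.

```python
import math


def belief_map(width, height, keypoint, sigma=2.0):
    """Gaussian heatmap (list of rows) that peaks at 1.0 on keypoint (u, v).
    sigma is in output-map pixels; 2.0 is an arbitrary choice for this sketch."""
    u, v = keypoint
    two_sigma_sq = 2.0 * sigma * sigma
    return [
        [math.exp(-((x - u) ** 2 + (y - v) ** 2) / two_sigma_sq) for x in range(width)]
        for y in range(height)
    ]


def argmax_2d(heatmap):
    """(x, y) of the strongest cell: how a predicted map is turned back into a point."""
    _, x, y = max((value, x, y) for y, row in enumerate(heatmap) for x, value in enumerate(row))
    return x, y


if __name__ == "__main__":
    bmap = belief_map(8, 6, keypoint=(5, 2))
    print(argmax_2d(bmap))  # (5, 2)
```

Training then compares the predicted maps with maps like these (for example with a mean-squared error). At inference, the peaks give the nine 2D points that go into PnP.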

Training and Testing for Evaluation:

The network was implemented in PyTorch v0.4. The VGG-19 feature extractor was initialized with publicly available pretrained weights from torchvision. For this demo object, the network was trained for 60 epochs with a batch size of 16, using Adam as the optimizer with a learning rate of 0.0001. Training and testing were both run on a Dell Precision 7820 workstation with an NVIDIA P4000 16GB GPU.
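In PyTorch these optimizer settings correspond to `torch.optim.Adam(model.parameters(), lr=0.0001)`. The sketch below spells out a single Adam update in plain Python, assuming PyTorch's default `betas=(0.9, 0.999)` and `eps=1e-8`. It also computes how many optimizer steps 60 epochs at batch size 16 imply. The dataset size in the usage lines is hypothetical, and the helper names are illustrative.

```python
import math


def total_steps(num_images, batch_size=16, epochs=60):
    """Optimizer updates in a full run; a final partial batch still counts."""
    return epochs * math.ceil(num_images / batch_size)


def new_state(n):
    """Fresh Adam state for n scalar parameters."""
    return {"t": 0, "m": [0.0] * n, "v": [0.0] * n}


def adam_step(params, grads, state, lr=1e-4, betas=(0.9, 0.999), eps=1e-8):
    """One in-place Adam update over flat lists of parameters and gradients.
    Defaults follow torch.optim.Adam, except lr, which is set to 0.0001."""
    b1, b2 = betas
    state["t"] += 1
    t = state["t"]
    for i, g in enumerate(grads):
        state["m"][i] = b1 * state["m"][i] + (1 - b1) * g      # first moment
        state["v"][i] = b2 * state["v"][i] + (1 - b2) * g * g  # second moment
        m_hat = state["m"][i] / (1 - b1 ** t)                  # bias correction
        v_hat = state["v"][i] / (1 - b2 ** t)
        params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)


if __name__ == "__main__":
    print(total_steps(20000))  # 75000 for a hypothetical 20,000-image dataset
    params, state = [1.0], new_state(1)
    adam_step(params, [3.0], state)
    print(params)  # about 0.9999: the first step moves each weight by roughly lr
```

Because Adam normalizes by the running gradient magnitude, the learning rate acts roughly as a per-step cap on how far each weight moves. That makes 0.0001 a fairly conservative setting when fine-tuning on top of pretrained VGG-19 features.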

For more, download the Falling Things dataset from https://research.nvidia.com/publication/2018-06_Falling-Things and train on its objects with https://github.com/avinashsen707/AUBOi5-D435-ROS-DOPE/blob/master/dope/scripts/train.py to obtain the corresponding weights.
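NDDS writes one JSON annotation file per captured frame. The Falling Things dataset was itself rendered with NDDS and follows the same layout. Below is a minimal loader for the keypoints a DOPE-style trainer needs. The field names (`objects`, `class`, `projected_cuboid`, `projected_cuboid_centroid`) follow the NDDS exporter, but verify them against a file from your own capture. The function name and the centroid-last ordering are choices made for this sketch.

```python
import json


def load_keypoints(annotation_json, class_name=None):
    """Return {class: [keypoints per instance]} from one NDDS-style frame annotation.

    Each instance has 9 (u, v) points: the eight projected cuboid corners,
    then the projected centroid (an ordering convention of this sketch)."""
    frame = json.loads(annotation_json)
    result = {}
    for obj in frame.get("objects", []):
        name = obj.get("class", "unknown")
        if class_name is not None and name != class_name:
            continue
        corners = [tuple(p) for p in obj["projected_cuboid"]]
        centroid = tuple(obj["projected_cuboid_centroid"])
        result.setdefault(name, []).append(corners + [centroid])
    return result


if __name__ == "__main__":
    # Tiny made-up annotation with one object; real files carry many more fields.
    sample = json.dumps({
        "objects": [{
            "class": "demo_part",
            "projected_cuboid": [[10 + i, 20 + i] for i in range(8)],
            "projected_cuboid_centroid": [13.5, 23.5],
        }]
    })
    print(len(load_keypoints(sample)["demo_part"][0]))  # 9
```

For a real frame, pass the file contents, e.g. `load_keypoints(open(path).read())`, where `path` points to one of the per-frame .json files.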

Debugging Object Detection on the Dev Set: Results:
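A common way to check detections against dev-set ground truth is the intersection-over-union (IoU) of 2D boxes. The sketch below assumes boxes given as (x_min, y_min, x_max, y_max) in pixels and uses greedy one-to-one matching. The 0.5 threshold is a widespread convention, not a value taken from this project.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds, gts, threshold=0.5):
    """Greedy one-to-one matching; returns how many ground-truth boxes are found."""
    used, hits = set(), 0
    for gt in gts:
        best, best_iou = None, threshold
        for i, p in enumerate(preds):
            if i not in used and iou(p, gt) >= best_iou:
                best, best_iou = i, iou(p, gt)
        if best is not None:
            used.add(best)
            hits += 1
    return hits


if __name__ == "__main__":
    print(iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.143
```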

Debugging Pose Estimation on the Test Dev Set: Results:
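For pose, a widely used score is the average distance (ADD) metric. You transform the object's model points by both the estimated pose and the ground-truth pose, then average the distances between corresponding points. A pose is often counted as correct when ADD is below a threshold such as 10% of the object's diameter. The sketch below is plain Python, with each pose given as a 3x3 rotation (nested lists) plus a translation. The threshold fraction and helper names are illustrative.

```python
import math


def apply_pose(R, t, p):
    """Rotate point p by R, then translate by t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


def add_metric(model_points, R_est, t_est, R_gt, t_gt):
    """Mean Euclidean distance between model points under the two poses."""
    total = 0.0
    for p in model_points:
        total += math.dist(apply_pose(R_est, t_est, p), apply_pose(R_gt, t_gt, p))
    return total / len(model_points)


def pose_correct(add_value, diameter, fraction=0.1):
    """Accept the pose if ADD is below a fraction of the object's diameter."""
    return add_value < fraction * diameter


if __name__ == "__main__":
    I = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    cube = [(x, y, z) for x in (-0.05, 0.05) for y in (-0.05, 0.05) for z in (-0.05, 0.05)]
    err = add_metric(cube, I, (0.01, 0.0, 1.0), I, (0.0, 0.0, 1.0))
    print(err, pose_correct(err, diameter=0.2))  # about 0.01 m, True
```

The cube corners here are stand-ins. In practice the model points would be sampled from the object's CAD mesh. Symmetric objects usually need the ADD-S variant, which matches each point to its closest counterpart instead.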